Superintelligence Strategy Response

Introduction

Dan Hendrycks, Eric Schmidt, and Alexandr Wang's recent Superintelligence Strategy provides an entirely new lens through which to view the geopolitical, socioeconomic, and legal implications of superintelligence. Most importantly, they introduce the concept of MAIM, or Mutual Assured AI Malfunction. This idea, like MAD (Mutually Assured Destruction) before it, provides a rational—albeit unnerving—tightrope for humanity to walk in order to survive the emergence of machines capable of global-scale destruction.

The basic premise of MAIM is that countries will be forced to sabotage AI systems which threaten their sovereignty.

After much deliberation, I agree with the authors that MAIM is a tractable way to avoid the biggest risk posed by AI: a catastrophic loss of control resulting from autonomous recursive self-improvement. A MAD-like framework for AI may sound misplaced at first, since AI and nuclear weapons are so different. However, their effects on the global balance of power create a similar dynamic. A key difference is that MAIM attacks need only cause malfunction, so they could actually be carried out without igniting a global catastrophe.

The Great Displacement

One crucial extension I propose is that MAIM must also address the unbridled competition that will lead to the excision of most human workers from the economy. Once capable AI agents and robots arrive, which will be soon, unchecked competition will drive most resources into ever-greater automation and away from the humans who get automated. Hendrycks et al. discuss taxes as a possible solution. However, countries that don't tax will outcompete those that do, leaving little for humans who no longer produce economic value. We will therefore need coordination between countries, grounded in MAIM, to ensure that resources remain available for displaced workers. This latter task is more difficult and will require careful timing against other forces, as discussed below. But MAIM forms a seemingly necessary foundation of forced cooperation upon which higher-level coordination, such as standards for global wealth redistribution, can occur.

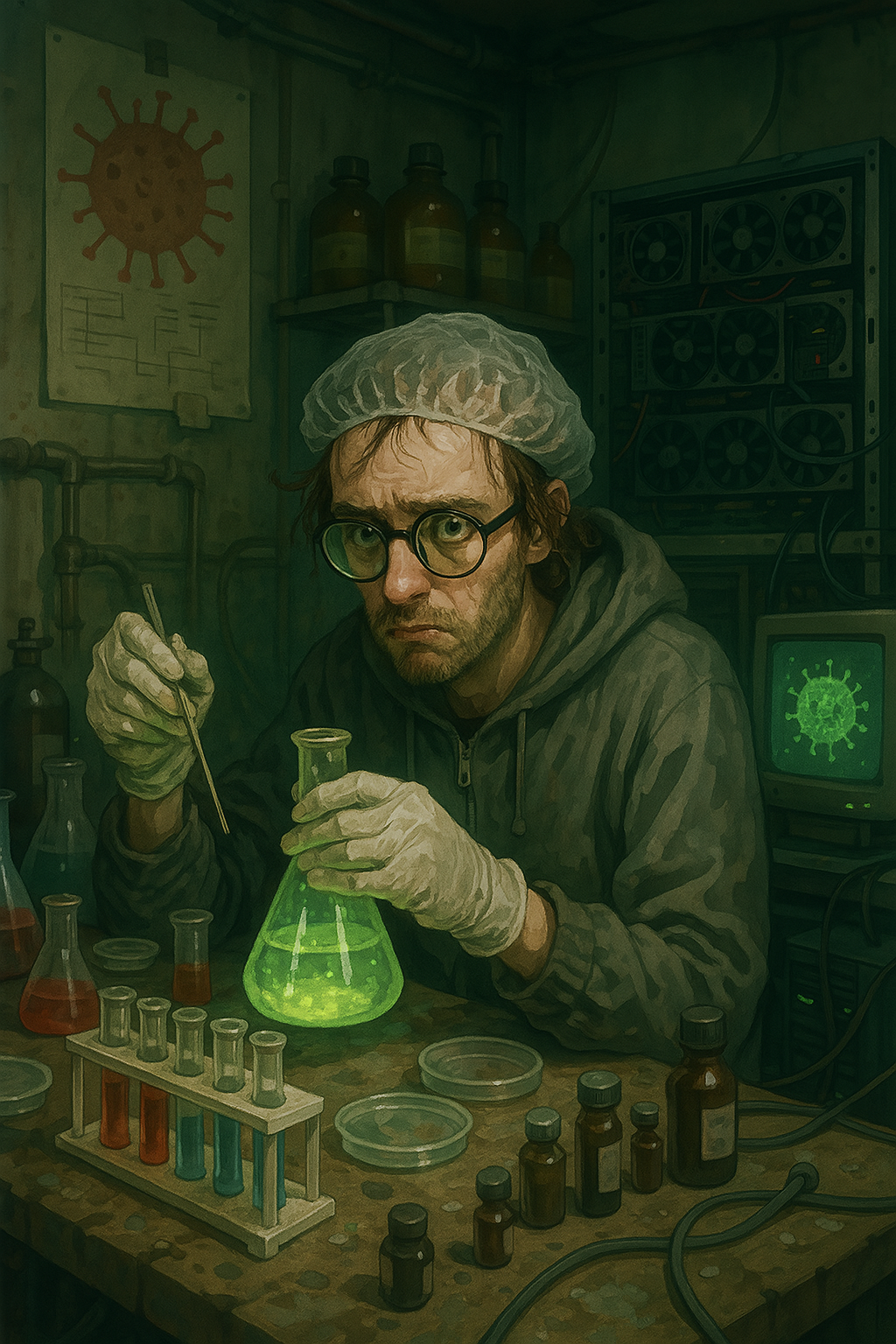

Supervirus Creation: The Primary Catastrophic Misuse Concern

Another topic discussed at length in the paper is catastrophic misuse of AI by rogue actors. The biggest risk here, in my opinion, is the creation of a supervirus that could cause untold suffering and death across the world. To address this risk, the authors primarily recommend nonproliferation: restricting the release of open-weight models and implementing safeguards on closed-weight models to prevent misuse. However, I do not think we can rely on nonproliferation to prevent catastrophic bioweapon misuse, because:

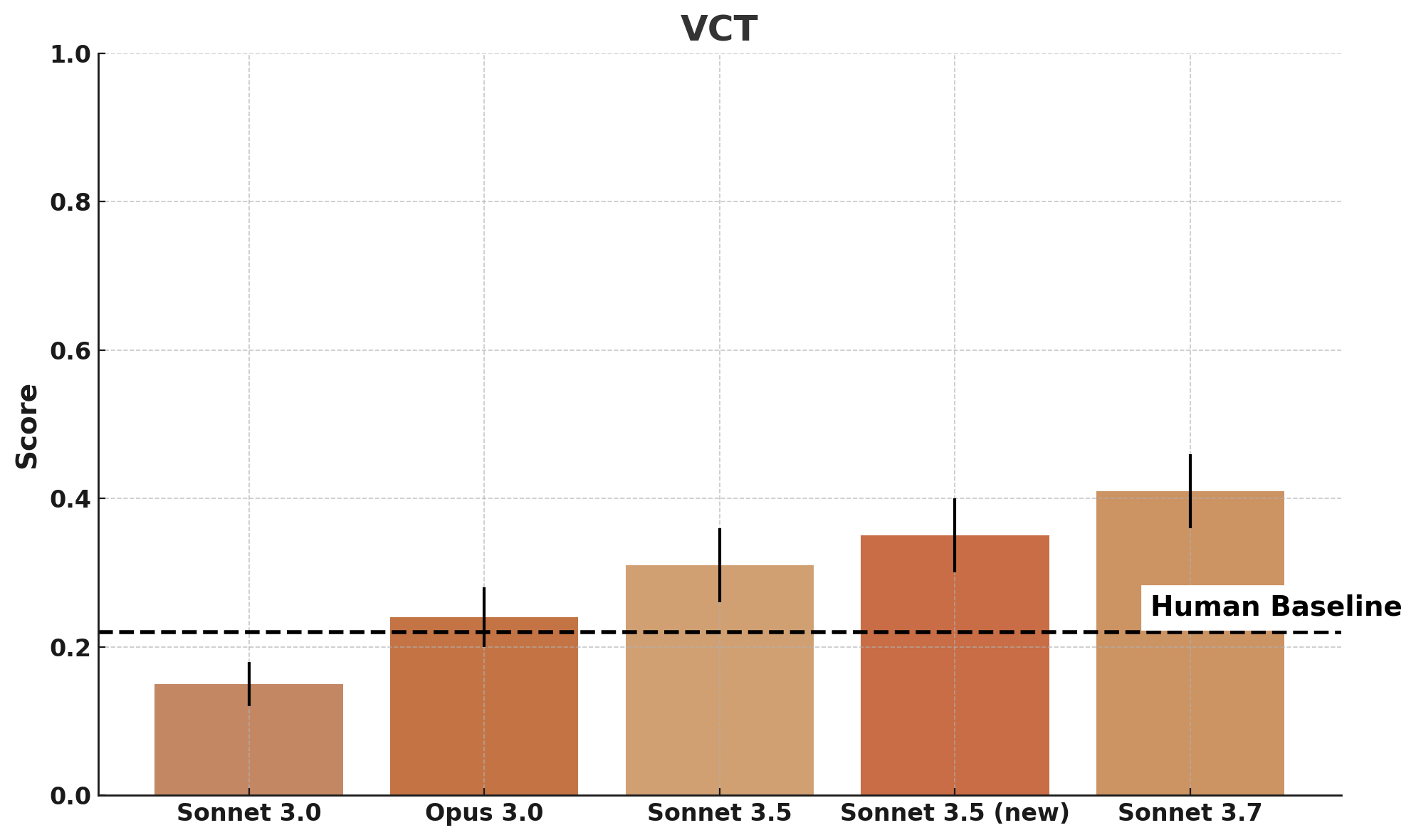

- Current models already provide uplift in virus creation ability (see Figure 1)

- Restricting the digital distribution of artifacts with such ubiquitously strong demand is unrealistic

Extending MAIM

Automate or Die

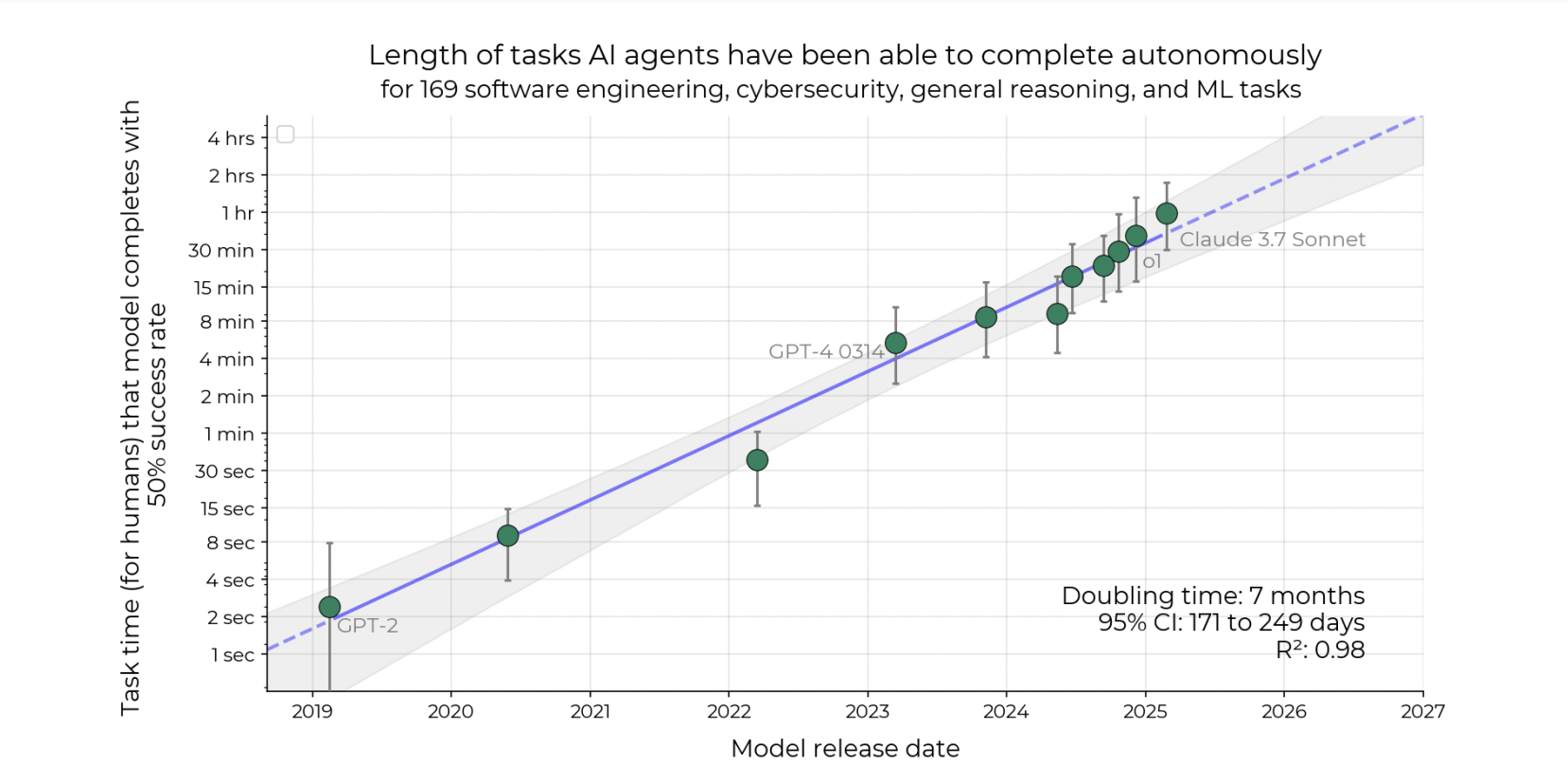

The competitive pressures inherent in AI development create a powerful incentive to automate tasks previously performed by humans. Retaining 'human-in-the-loop' processes, while potentially desirable for control, will likely become a significant competitive disadvantage in speed and efficiency. This automation wave is poised to begin with white-collar jobs, representing roughly a billion, or 50%, of the world's workforce. Trends like the doubling of automatable task lengths roughly every seven months (see Figure 2) show how rapid, and how exponential, the progress of the current generation of models has already been.
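To make that compounding concrete, here is a small worked extrapolation. It is only a sketch: it assumes the seven-month doubling simply continues at a steady rate, with $L_0$ standing for the task length models can handle today and $t$ measured in months. Both symbols are introduced here for illustration and are not taken from the underlying data.

$$
L(t) = L_0 \cdot 2^{t/7}, \qquad
\frac{L(36)}{L_0} = 2^{36/7} \approx 35, \qquad
\frac{L(48)}{L_0} = 2^{48/7} \approx 116
$$

Under that assumption, a three-to-four-year horizon would correspond to tasks roughly 35 to 116 times longer than today's. Any slowdown in the trend would shrink these multiples considerably.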

The Land of Agents

Preliminary indicators, such as slowing hiring for roles like software engineering, suggest that this automation process has already begun. Furthermore, the next generation of reasoning models, driven by reinforcement learning, targets coding, computer use, and robotics, because success on these tasks can be verified automatically, without a human in the loop. By extending into general-purpose robotics, this new generation of AI models could automate both white- and blue-collar work, which together account for 75% of the global workforce, in as little as three to four years.

The Collapse of Demand-Side Economics

Such a mass displacement would threaten to fundamentally alter our economic structure. Without intervention, wealth and resources are likely to concentrate dramatically in the hands of entities controlling the most capable AI systems, e.g., puppet CEOs whose primary function becomes maximizing reinvestment into further automation to stay competitive. In such a scenario, traditional demand-side economics falters as consumer spending from displaced workers plummets. And while governments will face pressure to prop up consumption through redistribution, competitive dynamics could starve the resources available for the unemployed, limiting their buying power to the minimum needed to prevent revolt. As suppliers become more efficient and demand drops, prices will also fall drastically. But nothing prevents incomes from falling just as fast or faster, because value creation will have moved from the labor market to the AI market. Compounding this, AI's dual-use nature means that falling behind translates directly into military vulnerability, which further intensifies the pressure to automate. And we don't have to guess whether this will happen: the trend is already visible, with U.S. professional services hires in 2025 recording 12-year lows.
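The point about falling prices can be stated with a standard identity. Let $Y$ be a displaced worker's nominal income and $P$ the price level, both introduced here for illustration. Real purchasing power is then $Y/P$, and its growth rate is exactly the difference between the two growth rates:

$$
\frac{d}{dt}\ln\!\left(\frac{Y}{P}\right) = \frac{\dot{Y}}{Y} - \frac{\dot{P}}{P}
$$

Cheaper goods therefore raise living standards only if incomes fall more slowly than prices. Whenever $\dot{Y}/Y \le \dot{P}/P$, purchasing power stagnates or shrinks, however cheap things become.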

MAIM for UBI

Hendrycks et al. propose value-added taxes on AI services to redistribute wealth. However, this seems quite optimistic, since nations that implement such taxes risk being outcompeted by those that don't. This is where extending MAIM becomes crucial. Just as MAIM provides a framework for forced cooperation to prevent recursive self-improvement, it should also provide leverage to enforce international coordination on wealth redistribution. The resulting slowdown in race dynamics would leave resources available for purposes other than AI development, most critically redistribution to laid-off workers.

Securing Against MAIM is Escalatory

It's important to note that sufficiently securing AI development against espionage and cyberattack may only increase the likelihood that states deploy kinetic weapons against datacenters or power grids. This is especially true because, without espionage, states will be forced to assume that a destabilizing level of AI capability is being developed outside their purview. Inducing AI malfunction through non-kinetic vectors, such as cyberattacks, remains considerably less escalatory than resorting to conventional military strikes on physical infrastructure. The result is a strong incentive for superpowers to make their frontier efforts transparent to one another.

Goldilocks Acceleration

Applying MAIM to regulate the pace of AI development and deployment requires careful calibration. If MAIM-induced constraints slow down frontier AI progress *too* drastically among leading actors, there's a risk that efficiency gains in more widely accessible, less advanced AI systems, like open models, could eventually enable distributed recursive self-improvement. This risk calls for a larger, decentralized version of MAIM—potentially involving peer-to-peer monitoring and more distributed governance. Since such a system lacks historical precedent and appears significantly more challenging to implement, it will be important to gauge the level of MAIM-allowed progress accordingly.

To Infinity and Beyond

Since AI-generated wealth can grow super-exponentially relative to today's wealth, humanity's monetary needs will trend toward zero in relative terms. This countertrend could mean that MAIM is mostly needed only until every human can be guaranteed a baseline livelihood. Beyond that threshold, a progressively smaller fraction of the exponentially expanding resources generated by hyper-efficient AI systems would suffice to support a wildly extravagant standard of living for everyone. And if and when we merge with AI, the line between us will blur, making redistribution a moot point.
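A minimal formalization of this shrinking share follows. Hold fixed a per-person baseline $b$ and a population $N$, and assume total AI-driven output $W(t)$ grows at least exponentially at some rate $g > 0$. All of these symbols are illustrative assumptions, not figures from the paper. The fraction of output needed to fund the baseline for everyone is then

$$
f(t) = \frac{N b}{W(t)}, \qquad
W(t) \ge W_0 e^{g t} \;\Rightarrow\; f(t) \le \frac{N b}{W_0}\, e^{-g t} \;\longrightarrow\; 0 \quad (t \to \infty)
$$

Super-exponential growth in $W$ only drives $f$ toward zero faster. Population growth would slow the decline but would not stop it, as long as it stays well below $g$.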

Bioweapon Defense

Nonproliferation

Hendrycks et al. place significant emphasis on avoiding catastrophic misuse, particularly the engineering of superviruses, through nonproliferation. Their strategy involves tighter controls on the release of open-weight models and enhanced security for closed-weight models. They dismiss defense as a solution, drawing parallels to historical challenges in defense against nuclear weapons, noting:

Historical efforts to shift the offense-defense balance illustrate inherent challenges with WMDs. During the Cold War, the Strategic Defense Initiative aimed to develop systems to intercept incoming nuclear missiles and render nuclear arsenals obsolete. Despite significant investment, creating an impermeable defense proved unfeasible, and offense remained dominant.

Bioweapons are Not Nukes

However, relying primarily on nonproliferation for AI-enabled bioweapons faces several critical challenges that differentiate it from nuclear nonproliferation. Firstly, a bioweapon such as an engineered virus spreads over time, so early detection and response can substantially blunt its impact, unlike the immediate finality of a nuclear attack. Secondly, AI models are fundamentally digital information. Unlike the complex physical infrastructure and materials required for nuclear weapons, AI models can be distributed globally almost instantly at near-zero marginal cost. Finally, the overwhelming majority of applications for open models are beneficial, driving innovation and utility across numerous domains. Strictly controlling their distribution would therefore be an extraordinarily difficult and unpopular effort compared with controlling nuclear materials.
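The first point can be made concrete with a simple model of early outbreak growth. This sketch assumes uncontrolled exponential spread with a constant doubling time $T_d$ and $I_0$ initial infections. These are illustrative symbols, not estimates for any particular pathogen. The number of people infected by the time a response begins at $t_r$ is

$$
I(t_r) = I_0 \cdot 2^{t_r / T_d}
$$

Under this model, every doubling time shaved off detection and response roughly halves the number of people already infected when countermeasures start.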

Bioweapon Defense Challenges

Given these difficulties with nonproliferation, a greater focus should be placed on differential technological development, specifically bolstering bioweapon defense capabilities relative to offensive ones. This involves investing heavily in technologies like advanced pathogen detection, monitoring, and rapid response systems. While detecting known pathogens is relatively mature, identifying novel or engineered threats poses a greater challenge. Technologies like wastewater monitoring using broad metagenomic sequencing show promise, but face hurdles such as:

- Most current systems are optimized for known pathogens, not novel ones

- Metagenomic data is inherently noisy, consisting of a wide mix of DNA from different sources

- Coverage is often limited to urban areas, neglecting rural populations

- Lack of standardization hinders comparability and integration of data

- Interpreting vast, complex datasets requires significant computational power and expertise

- A large portion of sequencing data constitutes viral dark matter – sequences from unknown viruses

- Distinguishing harmless environmental microbes from genuine threats, and determining which viruses can infect humans, remains complex

Differential AI

Crucially, many of these challenges in biodefense are fundamentally information processing problems. This is precisely where AI can offer transformative solutions. AI can significantly enhance our ability to sift through noisy metagenomic data, identify faint signals of novel pathogens, predict protein structures and functions, accelerate genome assembly, and potentially even assist in designing rapid diagnostic tools and countermeasures like vaccines or antivirals.

Moreover, strengthening pathogen defense and accelerating vaccine/treatment development is vital regardless of the AI misuse risk, as starkly demonstrated by the COVID-19 pandemic. Natural pandemics remain a significant threat to global health and stability. Investments in biodefense thus serve a crucial dual purpose. Encouragingly, spurred partly by COVID-19, progress is being made in areas like genomic sequencing, rapid vaccine platforms (like mRNA), and diagnostic technologies, creating a foundation for enhanced resilience. Prioritizing and accelerating these defensive technologies, leveraging AI's analytical power, offers a more robust and achievable path to mitigating catastrophic biological risks than relying solely on controlling AI model proliferation.

Conclusion

The emergence of superintelligence presents humanity with unprecedented challenges and opportunities. The MAIM framework, while initially unsettling, offers a pragmatic approach to maintaining global stability in the face of rapidly advancing AI capabilities. However, this framework must be extended beyond its original scope to address both the existential risks of AI and the socioeconomic challenges of widespread automation.

The dual nature of AI—as both a potential existential risk and a transformative economic force—requires a balanced approach to regulation and development. While MAIM provides a foundation for international cooperation, it must be carefully calibrated to prevent both excessive constraints that could lead to dangerous distributed capabilities and insufficient controls that might enable catastrophic misuse.

The bioweapon defense strategy, emphasizing differential technological development over strict nonproliferation, represents a more realistic and effective approach to mitigating biological risks. This strategy not only addresses AI-enabled bioweapon threats but also strengthens our overall resilience against natural pandemics.

The path forward requires unprecedented international cooperation, careful calibration of AI development pace, and significant investment in defensive capabilities. Despite the differences I list above, I agree with the main points in Superintelligence Strategy and will repeat their conclusion here as my own:

Some observers have adopted a doomer outlook, convinced that calamity from AI is a foregone conclusion. Others have defaulted to an ostrich stance, sidestepping hard questions and hoping events will sort themselves out. In the nuclear age, neither fatalism nor denial offered a sound way forward. AI demands sober attention and a risk-conscious approach: outcomes, favorable or disastrous, hinge on what we do next.

States that act with pragmatism instead of fatalism or denial may find themselves beneficiaries of a great surge in wealth. As AI diffuses across countless sectors, societies can raise living standards and individuals can improve their wellbeing however they see fit. Meanwhile leaders, enriched by AI's economic dividends, see even more to gain from economic interdependence and a spirit of détente could take root. During a period of economic growth and détente, a slow, multilaterally supervised intelligence recursion—marked by a low risk tolerance and negotiated benefit-sharing—could slowly proceed to develop a superintelligence and further increase human wellbeing. By methodically constraining the most destabilizing moves, states can guide AI toward unprecedented benefits rather than risk it becoming a catalyst of ruin.


Corrections

April 12, 2025: A previous version of this article incorrectly referred to white collar "job openings" reaching a 12-year low. The text has been corrected to refer to "hires" and now links directly to the BLS statistics page.